Defining a computer

A general-purpose computer is one that, given the appropriate instructions and enough time, should be able to perform most common computing tasks.

This sets a general-purpose computer apart from a special-purpose computer, like the one you might find in your dishwasher, which may have its instructions hardwired or coded into the machine. Special-purpose computers only perform a single set of tasks according to prewritten instructions. We’ll take the term computer to mean general-purpose computer.

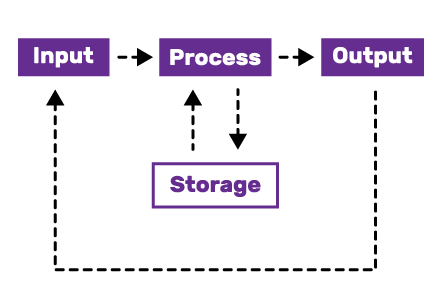

A simplified model of a computer: input, process, output and storage.

Although the input, output and storage parts of a computer are very important, they will not be the focus of this course. Instead, we will focus on the process part: how the computer is able to follow instructions to perform calculations.

Supplementary Resources

Early computing (Crash Course Computer Science)

Early Computing: Crash Course Computer Science #1

- The abacus was created because the scale of society had become greater than what a single person could keep track of and manipulate in their head.

- E.g. thousands of people in a village and tens of thousands of cattle.

- In a basic abacus, each row of beads (say, each in a different colour) represents a different power of ten.

- As well as aiding calculation, the abacus acts as a primitive storage device: the beads hold the current value.

- Similar early computing devices: the astrolabe, the slide rule, and clocks that calculated sunrise and tide times.
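To make the "one row per power of ten" idea concrete, here is a minimal Python sketch. It assumes a simplified decimal abacus where each row holds 0 to 9 beads and row i stands for 10**i; the helper names `abacus_rows` and `abacus_value` are hypothetical, chosen for illustration.

```python
def abacus_rows(n: int) -> list[int]:
    """Return the bead count on each row, lowest power of ten first.

    Hypothetical helper: assumes a simplified decimal abacus where each
    row holds 0-9 beads and row i stands for 10**i.
    """
    if n < 0:
        raise ValueError("a simple abacus only holds non-negative numbers")
    rows = []
    while True:
        rows.append(n % 10)  # beads pushed over on this row
        n //= 10             # move on to the next power of ten
        if n == 0:
            break
    return rows


def abacus_value(rows: list[int]) -> int:
    """Read the stored number back: sum of beads * 10**row_index."""
    return sum(beads * 10**i for i, beads in enumerate(rows))


rows = abacus_rows(2750)
print(rows)                # [0, 5, 7, 2]
print(abacus_value(rows))  # 2750
```

Reading the value back from the rows is the "storage" role described above: the beads keep the number until someone reads or changes it.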

“At each increase of knowledge, as well as on the contrivance of every new tool, human labour becomes abridged.” (Charles Babbage)

- One of the first computers of the modern era was the Step Reckoner, built by Gottfried Leibniz in 1694.

- As well as adding, this machine could multiply and divide, essentially through a trick: from a mechanical point of view, multiplication and division are just repeated addition and subtraction.

- For example, to divide 17 by 5, we subtract 5, then 5, then 5 again, until we can’t subtract any more. That is three subtractions with 2 left over, so 17 ÷ 5 = 3 remainder 2.

- Because these machines were expensive and slow, people instead used pre-computed tables, published in book form and produced by human computers. These were particularly useful for things like square roots.

- Similarly, range tables helped the military calculate distances for gunboat artillery, taking into account conditions like wind, drift, slope and elevation. These were used well into WWII, but each table applied only to a particular type of cannon or shell.
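The repeated-addition and repeated-subtraction trick described for the Step Reckoner can be sketched in Python. This is a software analogy of the idea only, not a model of the machine’s actual gearing; the function names `multiply` and `divide` are hypothetical.

```python
def multiply(a: int, b: int) -> int:
    """Multiply by repeated addition (assumes b >= 0)."""
    total = 0
    for _ in range(b):
        total += a  # add a, b times
    return total


def divide(dividend: int, divisor: int) -> tuple[int, int]:
    """Divide by repeated subtraction.

    Assumes dividend >= 0 and divisor > 0. Returns (quotient, remainder).
    """
    if divisor <= 0:
        raise ValueError("divisor must be positive")
    quotient = 0
    remainder = dividend
    while remainder >= divisor:  # stop once we can't subtract any more
        remainder -= divisor
        quotient += 1            # count how many times we subtracted
    return quotient, remainder


print(multiply(17, 5))  # 85
print(divide(17, 5))    # (3, 2)
```

`divide(17, 5)` follows the example in the notes exactly: 17 → 12 → 7 → 2, three subtractions with 2 left over.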

Before the invention of actual computers, ‘computer’ was a job title denoting people who were employed to carry out complex calculations, sometimes with the aid of machinery, but most often not. This persisted until the late 19th century, when the meaning of the word changed to include devices like adding machines.

- Babbage sought to overcome the limitations of these tables by designing the Difference Engine, which could compute polynomials: mathematical expressions made up of constants, variables and exponents. He never completed it in his lifetime because of the complexity and sheer number of intricate parts required. In the 1990s, a Difference Engine was finally built from his designs, and it worked.

- While working on it, he also conceived of a more ambitious, general-purpose computing device that wasn’t limited to polynomial calculations: the Analytical Engine.

- It was designed to run operations in sequence, and had memory and a primitive printer. It was far ahead of its time and was never completed.

- Ada Lovelace wrote hypothetical programs for the Analytical Engine, which is why she is often considered the world’s first computer programmer.

- Up to this point, computing was largely confined to scientific and engineering work. But in 1890, the US government needed a computer to comply with the constitutional requirement to hold a census every ten years. With the growing population this was becoming increasingly difficult: the census was estimated to take more than 13 years to complete. This led to the punch-card tabulating machine designed by Herman Hollerith, whose company would eventually become part of IBM.
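Babbage’s Difference Engine, described above, tabulated polynomials using the method of finite differences, which turns the work into nothing but repeated addition. Below is a minimal Python sketch of that method. The function names are hypothetical, and the example polynomial 2x² + 3x + 1 is chosen purely for illustration.

```python
from typing import Callable


def initial_differences(poly: Callable[[int], int], degree: int) -> list[int]:
    """Return [p(0), first difference at 0, second difference at 0, ...].

    Only degree + 1 direct evaluations of the polynomial are needed;
    everything after that is done by addition alone.
    """
    values = [poly(x) for x in range(degree + 1)]
    diffs = []
    while values:
        diffs.append(values[0])
        # Differences between neighbouring values form the next column.
        values = [b - a for a, b in zip(values, values[1:])]
    return diffs


def tabulate(diffs: list[int], count: int) -> list[int]:
    """Produce p(0), p(1), ..., p(count - 1) using only addition."""
    diffs = list(diffs)  # work on a copy
    table = []
    for _ in range(count):
        table.append(diffs[0])
        # Each column adds in the (not yet updated) column to its right.
        # The highest difference stays constant for a polynomial.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table


def p(x: int) -> int:
    return 2 * x**2 + 3 * x + 1


diffs = initial_differences(p, degree=2)
print(diffs)               # [1, 5, 4]
print(tabulate(diffs, 6))  # [1, 6, 15, 28, 45, 66]
```

Addition is the key design point: gears handle adding and carrying well, so a machine that only adds can still produce an entire table of polynomial values.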